California has passed new laws that redefine how AI systems must disclose, label, and safeguard their interactions with users. The AI Transparency Act and Companion Chatbot Law set new standards for transparency, accountability, and user protection. Every founder using or building AI should understand them to keep their company compliant and ahead of regulation.

AB 853, which amends California’s AI Transparency Act, and the Companion Chatbot Law (SB 243) were both signed into law on October 13, 2025. Key obligations take effect in stages: August 2, 2026 for covered AI providers, January 1, 2027 for large online platforms and generative-AI hosting services, and January 1, 2028 for recording-device manufacturers. Chatbot operators must begin annual safety reporting by July 1, 2027.

Together, these two laws change how companies must build, label, and operate AI products.

These rules do not just target big tech companies: they set a new standard for how AI should be built and disclosed. California’s framework is likely to influence regulations across the United States, meaning that even companies based elsewhere will need to align with these expectations. For founders, this matters because compliance now affects product design, user trust, and even fundraising. Transparent, responsible AI will soon be the expectation, not a bonus.

The updated AI Transparency Act reaches beyond the companies that build generative AI to cover large online platforms, generative-AI hosting services, and manufacturers of devices that record sound, images, or video. By broadening the focus from who creates AI to who distributes or hosts it, the law means that startups hosting models or building creative tools may also need to comply.

From January 1, 2027, large online platforms must detect and label AI-generated or AI-modified content and let users access its provenance data: digital information showing when and how the content was created.

This provenance data must include the model provider, version, and creation date so that AI output remains traceable as it moves across platforms. From 2028, recording devices sold in California will have to embed this same type of authenticity data, helping distinguish real media from deepfakes.
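As a rough illustration of what traceable metadata could look like in code, the Python sketch below bundles the fields named above (provider, model version, creation date) into a record, serializes it so it can travel with the content, and builds the kind of label a platform might show users. The class and function names, the extra `model_name` and `content_id` fields, and the label wording are assumptions for illustration, not terms from the statute. A production system would more likely rely on an established provenance standard such as C2PA Content Credentials than on ad hoc JSON.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical provenance metadata attached to one piece of AI output."""
    provider: str       # company operating the model
    model_name: str     # name of the generative AI system (assumed field)
    model_version: str  # version of the system that produced the content
    created_at: str     # ISO 8601 UTC timestamp of creation or modification
    content_id: str     # assumed unique identifier for the content item


def make_record(provider: str, model_name: str, model_version: str,
                content_id: str, created_at: Optional[datetime] = None) -> ProvenanceRecord:
    """Build a record; defaults to the current UTC time if no timestamp is given."""
    ts = (created_at or datetime.now(timezone.utc)).astimezone(timezone.utc)
    return ProvenanceRecord(provider, model_name, model_version,
                            ts.isoformat(timespec="seconds"), content_id)


def to_json(record: ProvenanceRecord) -> str:
    """Serialize the record so it can travel alongside the content as metadata."""
    return json.dumps(asdict(record), sort_keys=True)


def from_json(payload: str) -> ProvenanceRecord:
    """Parse metadata from another platform; missing or extra fields raise TypeError."""
    return ProvenanceRecord(**json.loads(payload))


def user_label(record: ProvenanceRecord) -> str:
    """Text a platform might display when provenance data is present."""
    return (f"AI-generated by {record.model_name} {record.model_version} "
            f"({record.provider}) on {record.created_at[:10]}")
```

Because `from_json` rebuilds the dataclass directly, metadata with missing or unexpected fields is rejected with a `TypeError` rather than silently accepted, which seems a sensible default when the goal is traceability.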

These rules aim to rebuild trust in digital content. For companies, they mean that transparency systems and metadata tracking must become part of the product architecture. Failing to comply can bring civil penalties that accrue for each day of violation, along with reputational damage.

The Companion Chatbot Law (SB 243) governs chatbots that simulate ongoing, human-like conversation for social or emotional interaction. It requires operators to publish crisis-response protocols for users who express distress or thoughts of self-harm, and to file annual safety reports with California’s Office of Suicide Prevention starting July 1, 2027.
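A crisis-response protocol is mostly a policy document, but one of its steps, routing a message that signals distress to crisis resources before the chatbot answers, can be sketched in Python. Everything here is hypothetical: `CrisisRouter`, the referral wording, and especially `naive_detector`, a keyword stub standing in for whatever classifier and clinical review an operator actually uses. The referral counter assumes that tallies of crisis referrals are useful for the annual safety report; the exact reporting fields should be checked against the statute.

```python
from dataclasses import dataclass
from typing import Callable

# Example referral text; wording and resources should be confirmed with crisis-service experts.
CRISIS_REFERRAL = (
    "It sounds like you may be going through something painful. "
    "You can call or text 988 to reach the 988 Suicide & Crisis Lifeline."
)


@dataclass
class CrisisRouter:
    """Hypothetical wrapper that screens each user message before the model replies."""
    detect_distress: Callable[[str], bool]  # assumed classifier supplied by the operator
    generate_reply: Callable[[str], str]    # the chatbot's normal reply function
    referral_count: int = 0                 # tally that could feed annual reporting

    def respond(self, message: str) -> str:
        # Flagged messages get the referral instead of a generated reply.
        if self.detect_distress(message):
            self.referral_count += 1
            return CRISIS_REFERRAL
        return self.generate_reply(message)


def naive_detector(message: str) -> bool:
    """Placeholder only: keyword matching is far too crude for production use."""
    keywords = ("hurt myself", "kill myself", "end my life")
    text = message.lower()
    return any(k in text for k in keywords)
```

Returning the referral in place of the model’s reply is one design choice; an operator might instead pair it with a tightly constrained reply. Either way, the detector is the hard part: keyword matching misses indirect expressions of distress and flags harmless messages.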

If a chatbot interacts with minors, it must clearly say it is AI, remind users periodically that it is not human, and restrict sexually explicit material. The goal is to protect young users from harm and emotional dependency.
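The periodic reminder can be modeled as a small piece of session state. The Python sketch below assumes the operator already knows the user is a minor, shows an AI disclosure when the conversation opens, and returns a reminder whenever a fixed interval has passed since the last one. The three-hour `REMINDER_INTERVAL`, the message wording, and the `MinorSession` class are assumptions for illustration; the required cadence and content should be confirmed against the text of SB 243.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Assumed cadence; confirm the required interval against the statute.
REMINDER_INTERVAL = timedelta(hours=3)

AI_DISCLOSURE = "You are chatting with an AI, not a human."
BREAK_REMINDER = "Reminder: this is an AI chatbot, not a person. Consider taking a break."


@dataclass
class MinorSession:
    """Hypothetical per-session state for a user the operator knows is a minor."""
    started_at: datetime
    last_reminder_at: Optional[datetime] = None

    def opening_message(self) -> str:
        """Disclosure shown when the conversation starts; resets the reminder clock."""
        self.last_reminder_at = self.started_at
        return AI_DISCLOSURE

    def maybe_remind(self, now: datetime) -> Optional[str]:
        """Return a reminder if the interval has elapsed since the last one, else None."""
        last = self.last_reminder_at or self.started_at
        if now - last >= REMINDER_INTERVAL:
            self.last_reminder_at = now
            return BREAK_REMINDER
        return None
```

Checking the interval on each incoming message, rather than running a background timer, keeps the state down to a single timestamp, at the cost of delaying a due reminder until the user next writes.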

Non-compliance can lead to private lawsuits, statutory damages, and injunctions. Founders operating chatbots with adaptive or “friend-like” behavior must implement safeguards, publish safety information, and have a reliable way to identify users who are minors in order to avoid liability.

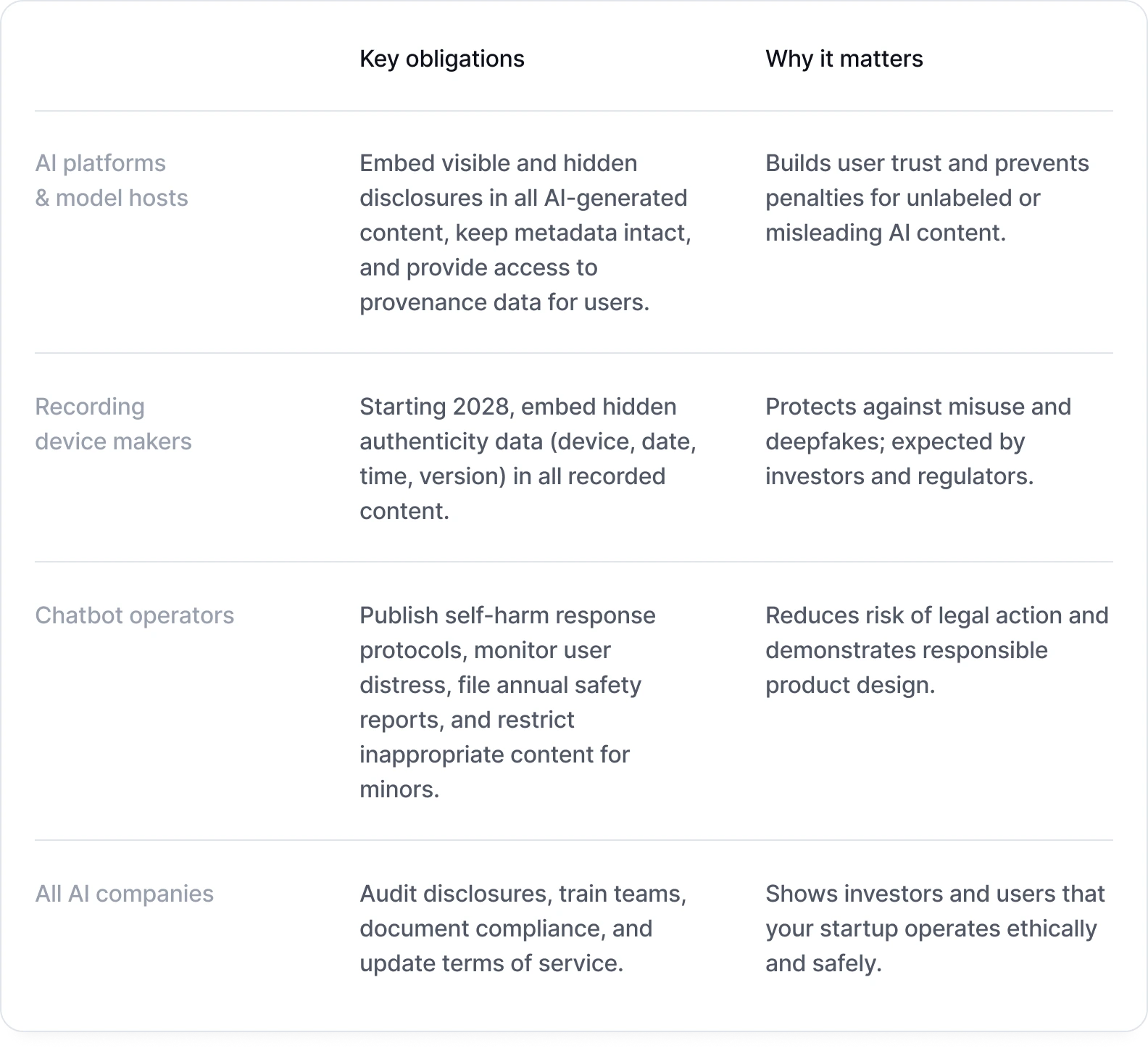

To help founders navigate the new rules, here’s a summary of the main compliance obligations under California’s AI Transparency Act and Companion Chatbot Law.

California’s new laws make transparency and safety central to how AI companies must operate. For founders, this is both a compliance challenge and a competitive opportunity. By building systems that label AI output, protect users, and prove authenticity, you not only meet legal standards but also create products that people can trust.