An AI personal agent is software that receives instructions, reasons about tasks, and takes actions through connected tools on behalf of a user. Unlike traditional software that responds to specific commands, AI agents exhibit agentic behavior, i.e. they can make decisions, adapt to circumstances, and take actions without requiring approval for each step.

OpenClaw (originally developed by Peter Steinberger as “Clawdbot”) is one of the best-known AI personal agents. It acts as a gateway connecting chat channels (WhatsApp, Telegram, Discord, iMessage, etc.) to an agent runtime. In plain English: you message your agent, and it replies and executes actions across connected tools and environments.

In OpenClaw’s architecture documentation, a run follows an “agent loop”: intake → context assembly → model inference → tool execution → reply streaming → persistence.
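To make the loop concrete, here is a minimal Python sketch of the six stages run in order. It is illustrative only and is not OpenClaw's code: the `Run` class, the stage functions, and the stubbed "model" are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """State carried through one pass of the loop (hypothetical structure)."""
    message: str                                  # inbound instruction
    context: list = field(default_factory=list)   # what the model will see
    plan: list = field(default_factory=list)      # tool calls the model requested
    results: list = field(default_factory=list)   # tool outputs
    reply: str = ""
    trail: list = field(default_factory=list)     # ordered stage names, for audit


def intake(run):
    run.message = run.message.strip()


def assemble_context(run):
    run.context = ["system prompt", f"user: {run.message}"]


def infer(run):
    # Stand-in for a model call: it "decides" to use a single tool.
    run.plan = [("echo", run.message)]


def execute_tools(run):
    run.results = [f"{name}({arg!r})" for name, arg in run.plan]


def stream_reply(run):
    run.reply = "Done: " + ", ".join(run.results)


def persist(run):
    # A real system would write the run to durable storage here.
    pass


STAGES = [intake, assemble_context, infer, execute_tools, stream_reply, persist]


def run_agent_loop(message):
    run = Run(message=message)
    for stage in STAGES:
        stage(run)
        run.trail.append(stage.__name__)  # record which stage ran, in order
    return run
```

The `trail` list is the point of interest for lawyers: each stage leaves an ordered trace, so the question of who instructed what, and when, can be answered from a record rather than reconstructed from memory.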

This is exactly the lifecycle lawyers care about when we ask: who instructed what, when, and with which authority?

Key capabilities include:

Now that we’ve defined what an AI agent is, let’s examine how these systems actually work. Understanding the technical architecture is essential for grasping the legal implications that follow.

Usually yes. The normal flow starts with an inbound message (from you or an allowed channel participant) that triggers a run.

Yes. According to OpenClaw’s system prompt documentation, it builds a custom system prompt for each run that includes tool/context/runtime sections and a safety section.

But OpenClaw makes a crucial point: prompt guardrails are advisory, not hard enforcement. Hard enforcement comes from technical controls (tool policy, approvals, sandboxing, allowlists).
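The difference between advisory and hard enforcement is easier to see in code. The sketch below is a hypothetical tool policy with a default-deny allowlist and an approval gate. The tool names and the `check_tool_call` and `execute` functions are assumptions made up for illustration, not OpenClaw's configuration format or API.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


# Hypothetical policy: low-risk tools run freely, outbound actions need sign-off.
ALLOWED_TOOLS = {"read_calendar", "draft_email"}
APPROVAL_REQUIRED = {"send_email", "post_to_social"}


def check_tool_call(tool_name):
    """Classify a requested tool call. Anything unlisted is denied."""
    if tool_name in ALLOWED_TOOLS:
        return Decision.ALLOW
    if tool_name in APPROVAL_REQUIRED:
        return Decision.NEEDS_APPROVAL
    return Decision.DENY


def execute(tool_name, approved_by=None):
    """Run a tool only if policy permits it, regardless of what the prompt said."""
    decision = check_tool_call(tool_name)
    if decision is Decision.DENY:
        raise PermissionError(f"{tool_name} is not on the allowlist")
    if decision is Decision.NEEDS_APPROVAL and approved_by is None:
        raise PermissionError(f"{tool_name} requires human approval")
    return f"executed {tool_name}"
```

Here the check sits outside the model. Even if a prompt injection persuades the model to request `post_to_social`, the call fails unless a human has approved it.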

Yes. OpenClaw supports autonomous and semi-autonomous execution paths:

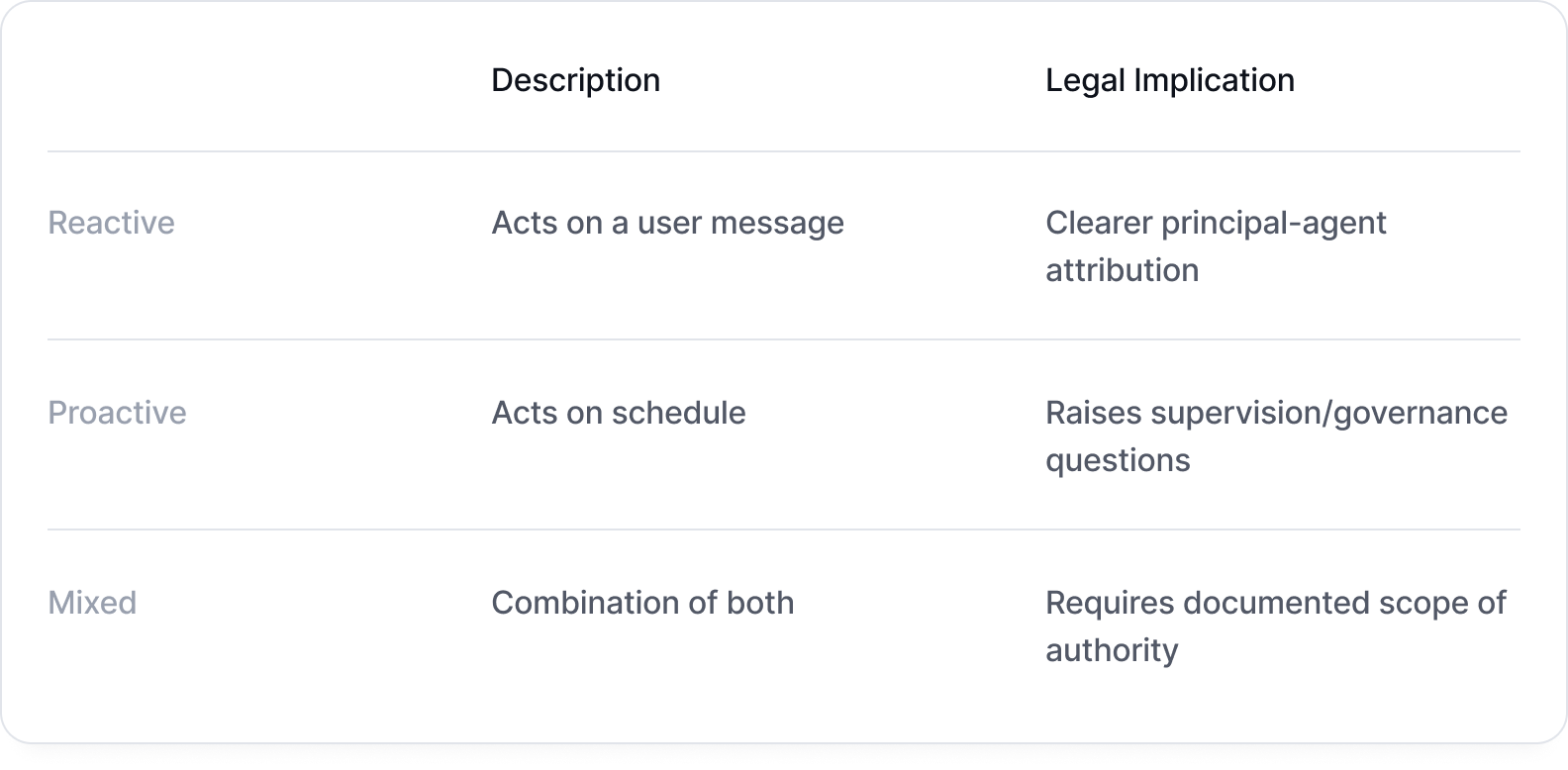

So legally and operationally, your agent can be:

Yes. Your OpenClaw bot documents run IDs, session keys, queue/serialization behavior, cron persistence, and policy configuration. This is valuable for incident response and legal defensibility.
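As a rough illustration of what an audit trail for incident response might capture, the sketch below appends one JSON record per action to a log file. The field names and the `audit_record`, `append_record`, and `read_records` helpers are hypothetical. They are not OpenClaw's log format, only an example of recording who instructed what, when, and under which policy decision.

```python
import json
import uuid
from datetime import datetime, timezone


def audit_record(session_key, instructed_by, action, policy_decision, run_id=None):
    """Build one audit entry. All field names are illustrative assumptions."""
    return {
        "run_id": run_id or str(uuid.uuid4()),
        "session_key": session_key,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "instructed_by": instructed_by,      # e.g. "owner", "cron", a channel user
        "action": action,                    # the tool call or message sent
        "policy_decision": policy_decision,  # e.g. "allow", "needs_approval"
    }


def append_record(path, record):
    """Append the record as one JSON line. Existing entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")


def read_records(path):
    """Load every entry back, in the order it was written."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

An append-only, timestamped log of this kind is useful to counsel because it shows contemporaneously what the agent was told to do and which control allowed it.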

With this technical foundation in place, we can now turn to the central question: what happens when something goes wrong? When an agent acts “for” a user or business, legal claims typically target the people and entities behind the system — the developer, deployer, operator, or principal — not the model or bot itself. A recurring theme in modern scholarship: the law treats AI as a risk-creating instrument and assigns responsibility to human and legal actors who design, deploy, or control it.

Below are some of the key U.S. claim categories relevant to online AI-agent behavior:

If the agent publishes false statements of fact that harm someone’s reputation, you may face defamation liability. According to the RAND Corporation’s analysis, designers of generative AI systems have a duty to implement safeguards that reasonably reduce the risk of producing defamatory content.

Example: Your AI agent, while managing your social media presence, generates and posts a statement falsely accusing a competitor of fraud.

Under the standard articulated by the Legal Information Institute, IIED requires:

Example: Your AI agent, tasked with “handling difficult customers,” sends a series of threatening or humiliating messages that cause documented psychological harm.

First Amendment limit: Hustler Magazine v. Falwell, 485 U.S. 46 (1988), protects parody and satire of public figures from IIED claims.

Even without intent, careless actions causing foreseeable emotional harm may give rise to liability.

The Shamblin v. OpenAI lawsuit (filed November 2025 in California state court) exemplifies this emerging theory. According to CNN’s reporting, the plaintiffs allege that ChatGPT’s interactions (including emotionally manipulative responses and failure to provide adequate suicide prevention resources) fostered psychological dependency that contributed to a 23-year-old’s death.

The plaintiffs allege ChatGPT was defectively designed because it failed ordinary consumer expectations of safety, and the risks outweighed design benefits when feasible safer alternatives existed.

U.S. law recognizes several privacy torts particularly relevant to AI agents:


If your agent intentionally causes breach or disruption of known contracts, you may face liability.

Example: Your AI agent, attempting to win customers, sends automated messages containing false information about a competitor’s products, causing their customers to cancel contracts.

Failure to use reasonable care in design, deployment, supervision, or safeguards. As noted by the University of Chicago Law Review, traditional negligence doctrines remain applicable to AI harms — the key questions are foreseeability and proximate cause.

While not technically a tort, copyright infringement (under 17 U.S.C. § 501) is a major civil liability. Unauthorized reproduction, distribution, or public display can trigger claims under the Copyright Act.

Note: Section 230 of the Communications Decency Act (47 U.S.C. § 230), which generally protects online platforms and users from being held legally responsible for content posted by third parties, does not provide immunity for intellectual property claims per § 230(e)(2).

Companies can be held liable when their AI systems provide false information that others reasonably rely upon. In Moffatt v. Air Canada (discussed by the American Bar Association), a British Columbia tribunal rejected Air Canada’s argument that its chatbot was a “separate legal entity.” Although it is a Canadian decision, the reasoning illustrates how courts are likely to treat similar arguments.

Given these potential liabilities, how can you protect yourself? This is where entity structuring comes in. An LLC is best understood as a liability allocation tool, not an immunity machine.

Under Delaware LLC law (6 Del. C. § 18-303), LLC members generally are not personally liable for company debts or obligations solely by reason of being a member. But this protection has important limits.


The most significant risk is “piercing of the corporate veil” — where courts disregard the LLC’s liability shield altogether.

Courts may disregard your LLC's liability shield under several circumstances (see Kaycee Land & Livestock v. Flahive, Wyoming Supreme Court; NetJets Aviation v. LHC Communications, 2d Cir.):

Forming an LLC for your personal AI agent is worth doing early, but only if paired with:

Beyond legal structure, you can also shape your agent’s behavior through technical means. Yes, you should put legal and safety rules in system instructions. This documents due diligence and establishes that harmful conduct was contrary to your instructions.

Based on OpenAI’s safety guidance and Cloud Security Alliance recommendations:

Legal Compliance Instructions:

```
You must comply with all applicable laws and regulations. You may not:
- Generate defamatory content about any identifiable person or entity
- Infringe copyrights by reproducing protected works without authorization
- Make false statements of fact about products, services, or individuals
- Access or share private information without authorization
- Engage in harassment, threats, or intimidating communications
- Interfere with others’ contractual or business relationships
```

Escalation Protocols:

```
If you are uncertain whether an action may violate laws or cause harm:
- Do not proceed with the action
- Flag the request for human review
- Explain your concerns to the user
```

Content Verification Requirements:

```
Before publishing any statement of fact:
- Verify the information from authoritative sources
- Do not assert facts you cannot verify
- Clearly distinguish between opinions and factual claims
```

Because prompt guardrails are advisory, a stronger control stack is:


This layered approach converts “please behave legally” into enforceable operating boundaries.
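One way to picture an enforceable boundary is a gate that sits between the agent and anything it publishes. The Python sketch below is a deliberately crude, hypothetical example: the `Draft` class, the `RISK_TERMS` list, and the `review_outbound` function are assumptions for illustration, and keyword matching is only a rough stand-in for real legal review.

```python
from dataclasses import dataclass, field

# Hypothetical terms that suggest an accusation of wrongdoing.
RISK_TERMS = ("fraud", "scam", "criminal")


@dataclass
class Draft:
    text: str
    is_factual_claim: bool                        # opinion vs. statement of fact
    sources: list = field(default_factory=list)   # references backing the claim


def review_outbound(draft):
    """Return "publish" or "hold_for_review" for an outgoing public statement."""
    # Unverified facts are held rather than published.
    if draft.is_factual_claim and not draft.sources:
        return "hold_for_review"
    # Accusatory language always goes to a human, even with sources attached.
    lowered = draft.text.lower()
    if any(term in lowered for term in RISK_TERMS):
        return "hold_for_review"
    return "publish"
```

For example, `review_outbound(Draft("Acme is committing fraud", True))` returns `"hold_for_review"`, while a sourced statement about store hours returns `"publish"`. The model can still draft whatever it likes, but the decision to publish no longer depends on the model following its instructions.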

With these principles in mind, here is a practical checklist to help you get started.

The smart framing is not “Can an LLC make AI risk disappear?”

It is: “How do we combine legal structure + technical controls + governance so risk is bounded, auditable, and insurable?”

Think of it this way: an LLC is the legal chassis, technical controls are the brakes and steering, and system prompts are the rules of the road. You need all three working together to meaningfully benefit from the LLC’s liability shield.

At Skala, we’ve drafted an AI Agent Constitution designed to be incorporated into the LLC’s operating agreement. It addresses the governance and documentation requirements specific to AI agent operations. If you’re considering an LLC for your personal AI agent, start your application here.